Marcus Ghosh

@marcusghosh

Postdoctoral Fellow (@ImperialX_AI, @SchmidtFutures).

Working on multisensory integration with @neuralreckoning.

🐘 @[email protected]

ID: 1140273182651428865

https://profiles.imperial.ac.uk/m.ghosh

16-06-2019 15:01:26

356 Tweets

947 Followers

568 Following

*Fully funded PhD position opening*💥 I have an opening for my first PhD student to come to Imperial College London, co-supervised with Dan Goodman, working on new projects in neuro-AI 🧠 Must be UK home fees status (3 yrs in UK prior). Plz share! Further details below 👇👇

Really looking forward to sharing some recent work at ICNS (Institute of Comput Neurosci, UKE Hamburg) today (15:00 CEST). Join virtually here: uni-hamburg.zoom.us/meeting/regist…

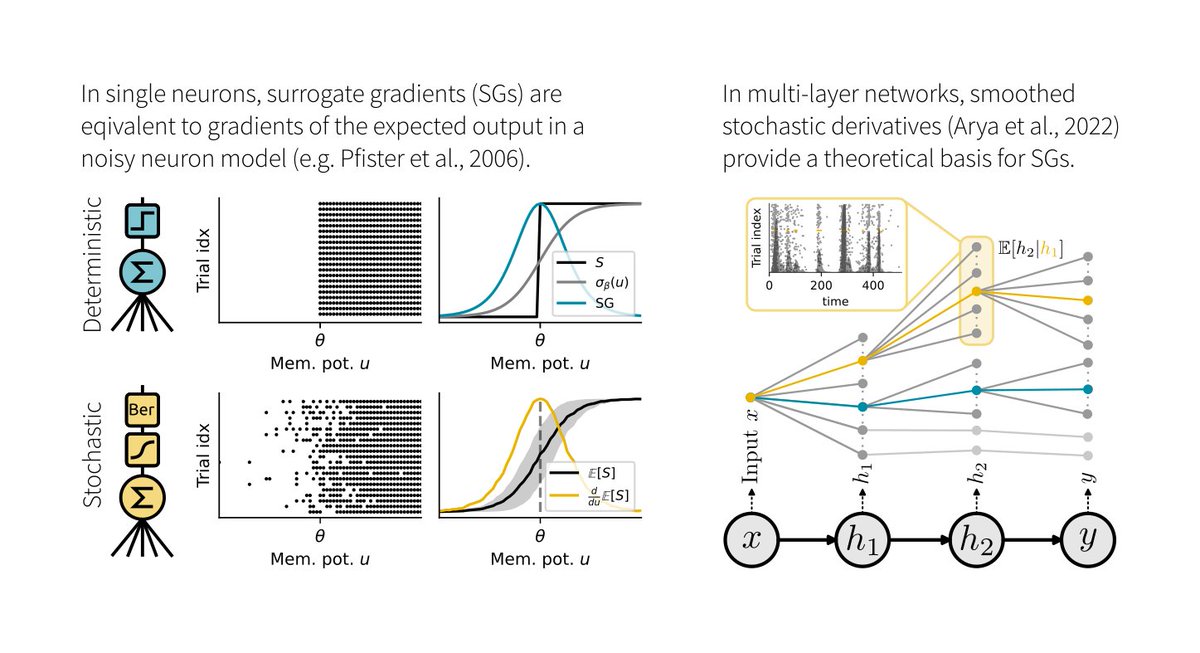

1/6 Surrogate gradients (SGs) are empirically successful at training spiking neural networks (SNNs). But why do they work so well, and what is their theoretical basis? In our new preprint led by Julia Gygax, we provide the answers: arxiv.org/abs/2404.14964

Awesome to see two Zebrafish Rock! papers out from my old colleagues @uclcdb this week (Anya Suppermpool, The Rihel lab, Stephen Wilson etc): ⚪️ Sleep pressure and synapses (doi.org/10.1038/s41586…) ⚪️ Neuronal asymmetry (doi.org/10.1126/scienc…)

Really enjoyed this thoughtful pair of articles, from Máté Lengyel & Jonathan Pillow, on the usefulness of Marr's three levels in #neuroscience: ❤️ doi.org/10.1113/JP2795… ❤️‍🩹 doi.org/10.1113/JP2795…

2 years in the making, the last chapter of my PhD thesis, and a set of wonderful collaborators: @m00rcheh, @ShreyDixitAI, Bratislav Misic, Caio Seguin, G. Zamora-López, Claus C Hilgetag. 🧵 What can we learn about communication in brain networks from 7000 million virtual lesions:

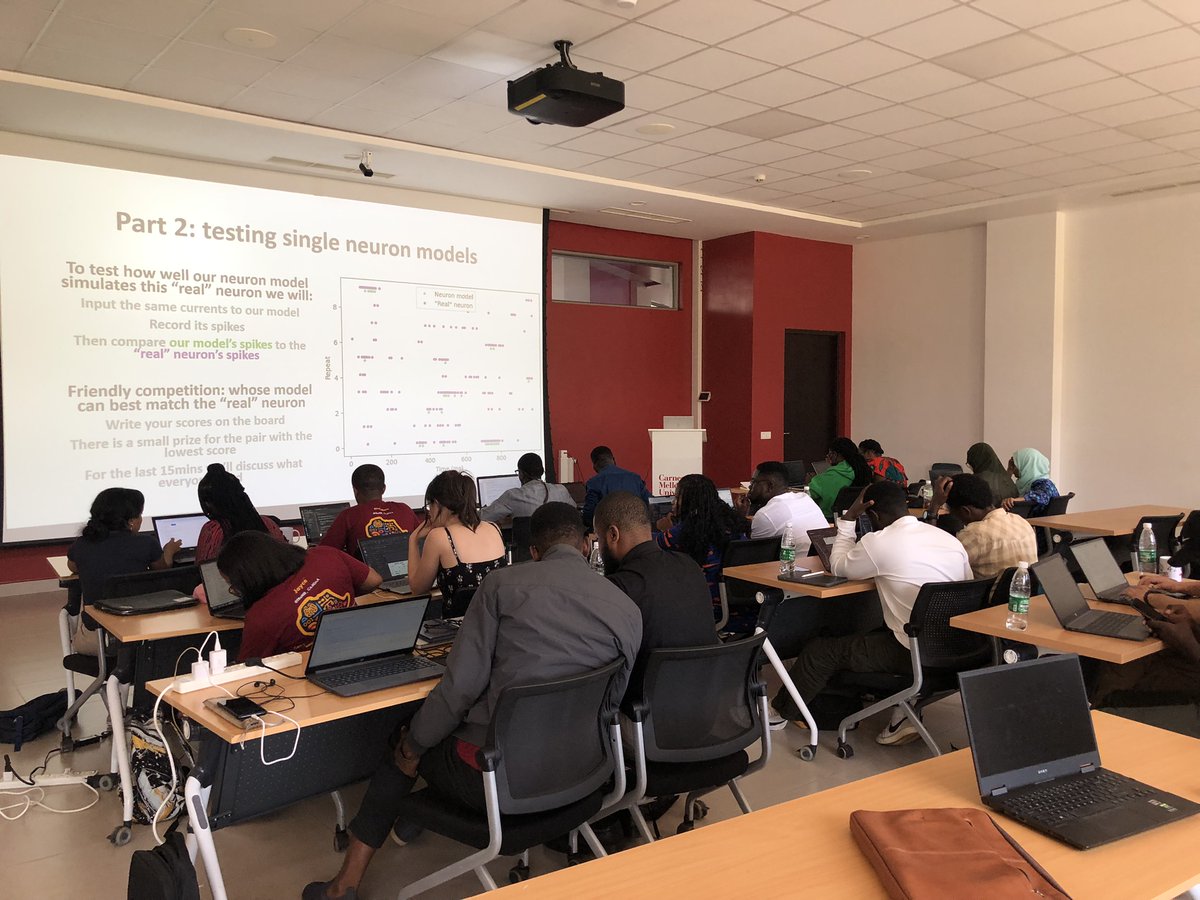

Teaching on the TReND-CaMinA (TReND in Africa) summer school (Rwanda) was an incredible experience. Both the students and staff (below) were super inspiring! Saray Soldado-Magraner, Joana Soldado-Magraner, Tom George, Artemis Koumoundourou, Burak Gür, Tom Chartrand, Po-Chen (Frederic) Kuo, Devon Jarvis etc

I hadn’t really thought about the variety of algorithms that *could* optimally underpin multi-modal processing/integration, until this awesome work by Marcus Ghosh & team. Clear findings w/ experimentally testable predictions on nonlinear fusion in agents & organisms! 👌

How should we support research software engineers? One idea from Rory Byrne!